Install Advanced Cluster Management and GitOps Operators

This section guides you through installing the Advanced Cluster Management (ACM) and GitOps Operators, and then integrating ACM with GitOps so that applications can be deployed across multiple clusters.

Here’s a list of required operators to install through the Operator Lifecycle Manager (OLM), which manages the installation, upgrade, and removal:

- Advanced Cluster Management Operator
- GitOps Operator

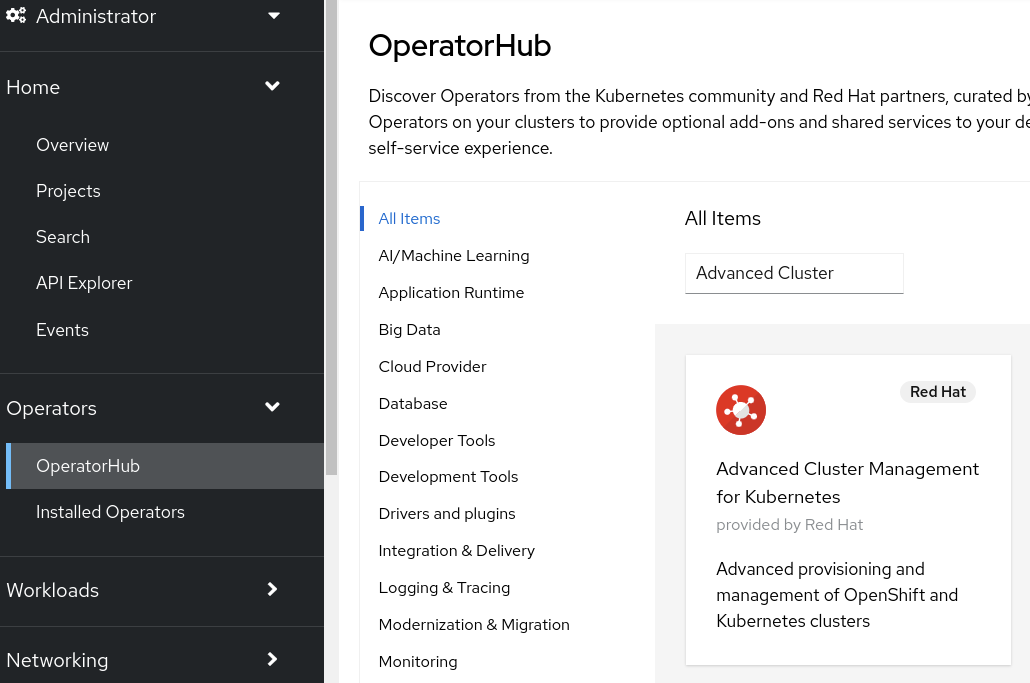

Install Advanced Cluster Management Operator

We will install the Advanced Cluster Management Operator with the following steps:

- Enable master node scheduling

We are going to enable master nodes for scheduling workloads.

    oc --context acm replace -k 02_bootstrap/base/openshift/base

Let’s check the results as follows:

    oc --context acm get scheduler cluster -o=jsonpath='{.spec.mastersSchedulable}{"\n"}'

We are expecting true as a result.

| This configuration is not recommended for production ;-) |
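For scripted runs, the same check can be wrapped in a small helper that succeeds only when the value is true. This is a minimal sketch: the function name `masters_schedulable` is hypothetical, and the default context `acm` is taken from the commands in this guide.

```shell
# Succeed only when the cluster Scheduler reports mastersSchedulable=true.
# The function name is illustrative; the context defaults to "acm" as in this guide.
masters_schedulable() {
  local ctx="${1:-acm}" value
  value="$(oc --context "$ctx" get scheduler cluster \
    -o jsonpath='{.spec.mastersSchedulable}')"
  [ "$value" = "true" ]
}

# Usage:
#   masters_schedulable acm && echo "master nodes are schedulable"
```

The helper returns a non-zero status for any other value, including an empty one, so it can gate the next step of a script.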

- Install the Advanced Cluster Management Operator:

Let’s install the Advanced Cluster Management Operator.

    oc --context acm apply -k 02_bootstrap/base/acm_operator/base

It will create the following resources:

- Namespace: open-cluster-management
- OperatorGroup: open-cluster-management-rhte2023
- Subscription: Advanced Cluster Management Operator

Wait until all pods are Running and Ready. This can take a couple of minutes.

    watch oc --context acm get pod -n open-cluster-management

After installing the Advanced Cluster Management Operator, we also need to create the MultiClusterHub custom resource.

- Create the MultiClusterHub:

    oc --context acm apply -k 02_bootstrap/base/acm_multiclusterhub/base

Wait until the MultiClusterHub reaches the Running phase; until then it reports Installing. This can take up to 10 minutes.
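For unattended runs, the wait can also be scripted as a polling loop. The sketch below assumes a single MultiClusterHub in the open-cluster-management namespace, as in this guide; the function name `wait_for_mch_running`, the 15-minute default timeout and the 15-second interval are illustrative choices, not part of the workshop repository.

```shell
# Poll the MultiClusterHub phase until it is Running or the timeout expires.
# Optional arguments: context, timeout in seconds, poll interval in seconds.
# All defaults are assumptions made for this sketch.
wait_for_mch_running() {
  local ctx="${1:-acm}" timeout="${2:-900}" interval="${3:-15}"
  local elapsed=0 phase=""
  while [ "$elapsed" -lt "$timeout" ]; do
    # The query fails while the resource does not exist yet; treat that as "unknown".
    phase="$(oc --context "$ctx" get mch -n open-cluster-management \
      -o jsonpath='{.items[0].status.phase}' 2>/dev/null)"
    if [ "$phase" = "Running" ]; then
      echo "MultiClusterHub is Running"
      return 0
    fi
    echo "MultiClusterHub phase: ${phase:-unknown}; retrying in ${interval}s"
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
  echo "Timed out after ${timeout}s waiting for MultiClusterHub" >&2
  return 1
}

# Usage:
#   wait_for_mch_running acm 900 15
```

A bounded loop is used instead of `watch` so that a script fails with a clear message when the installation stalls.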

Follow the phase with:

    watch oc --context acm get mch -o=jsonpath='{.items[0].status.phase}' -n open-cluster-management

Once the phase changes to Running, verify that all pods are Running and Ready.

    watch oc --context acm get pod -n open-cluster-management

Access to the Advanced Cluster Management Web Console

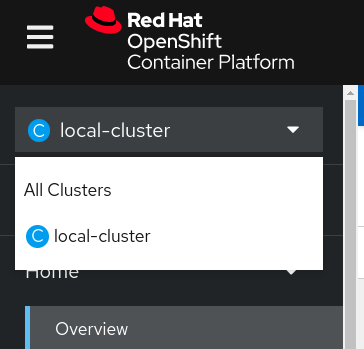

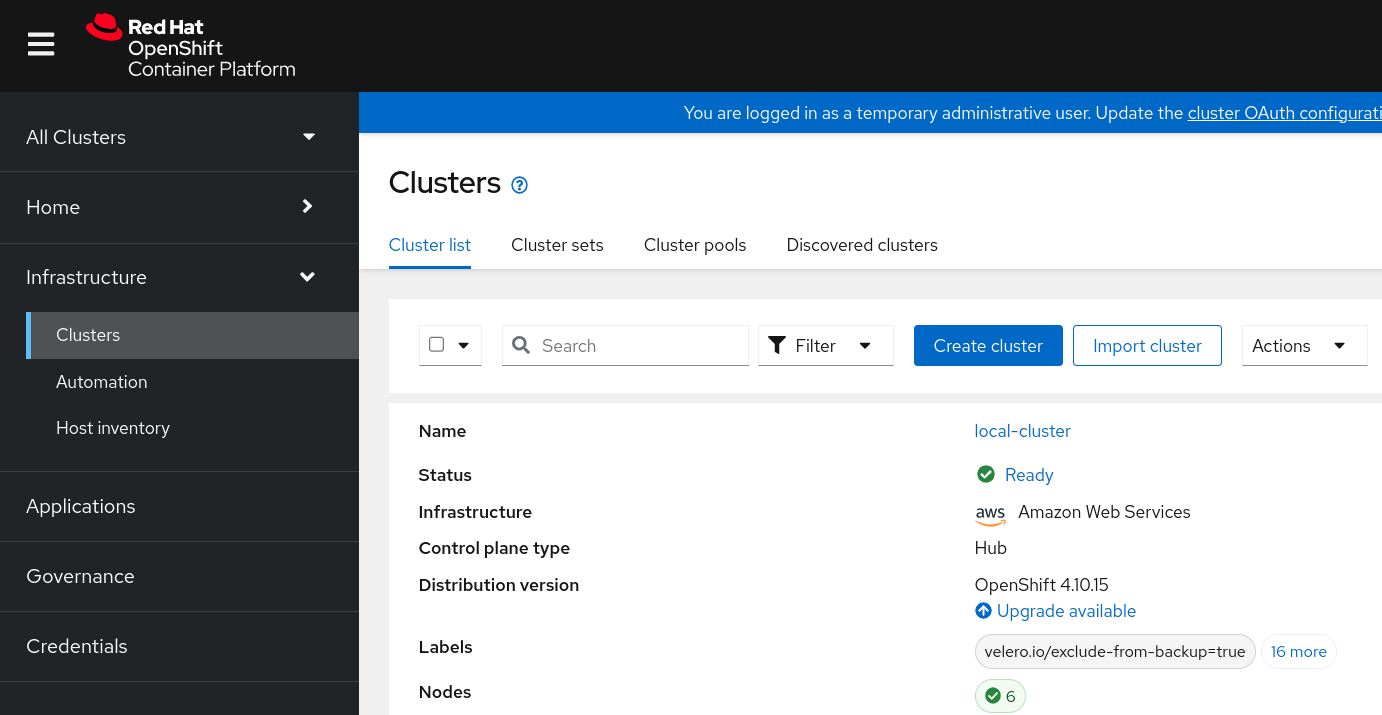

The Advanced Cluster Management Console is integrated with the OpenShift Console. Once the Advanced Cluster Management Operator is installed, the OpenShift Console shows a new item, "All Clusters", in the side navigation menu, as shown in the images above.

- Access to the Advanced Cluster Management Console:
  - Console: https://console-openshift-console.apps.<your_domain>/
  - User: kubeadmin
  - Password: <your_password>
- "All Clusters" gives you access to the Advanced Cluster Management Console, and "local-cluster" gives you access to the OpenShift cluster.

Advanced Cluster Management also provides a dashboard UI, which we can access through an OpenShift Route. List the routes in the open-cluster-management namespace and get the address of the console, https://multicloud-console.apps.<your_domain>:

    oc --context acm get route -n open-cluster-management

Let’s switch to the Advanced Cluster Management Console and log in with OpenShift to check that we can access it properly.

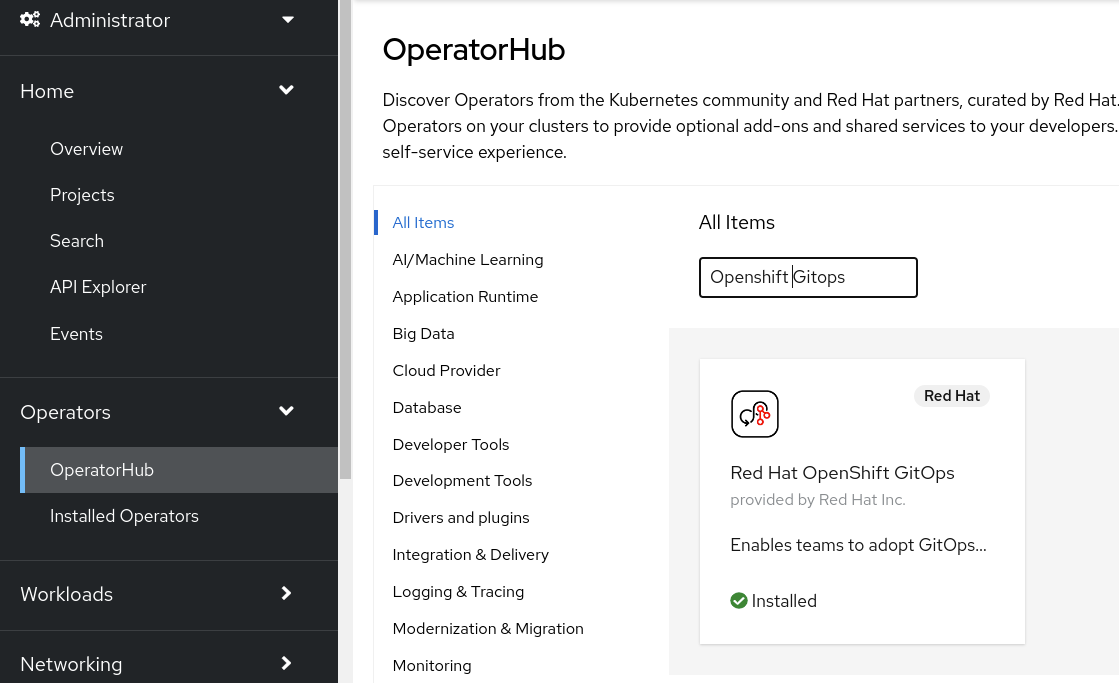
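When only the console address is needed, the route host can be read directly instead of scanning the route list. This is a minimal sketch: the function name `route_url` is hypothetical, and the route name `multicloud-console` in the usage line is an assumption inferred from the URL mentioned above, so confirm it against the output of `oc get route` first.

```shell
# Print the https URL of a Route, given a context, a namespace and a route name.
# The https scheme is an assumption that holds for TLS-enabled routes.
route_url() {
  local ctx="$1" ns="$2" name="$3" host
  host="$(oc --context "$ctx" get route "$name" -n "$ns" \
    -o jsonpath='{.spec.host}')" || return 1
  # An empty host means the route was not found or is not admitted yet.
  [ -n "$host" ] || return 1
  printf 'https://%s\n' "$host"
}

# Usage (route name assumed, verify it in your cluster):
#   route_url acm open-cluster-management multicloud-console
```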

Install GitOps Operator

The next step is the OpenShift GitOps Operator installation. OpenShift GitOps uses Argo CD to manage specific cluster-scoped resources. Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes.

Let’s install it:

-

Setup GitOps Operator:

oc --context acm apply -k 02_bootstrap/base/gitops_operator/base/Wait until pods in openshift-gitops namespace are in Running status and Ready. It can takes a up 2 minutes.

watch oc --context acm get pods -n openshift-gitops-

Change tracking resources from label to annotation:

oc patch argocd openshift-gitops -n openshift-gitops --type='json' -p='[{"op": "add", "path": "/spec/resourceTrackingMethod", "value": "annotation" }]'-

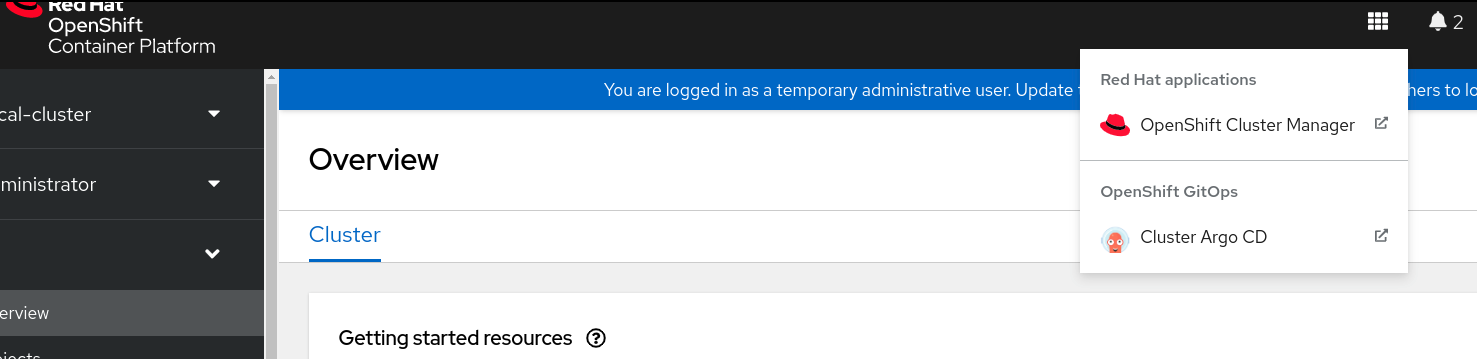

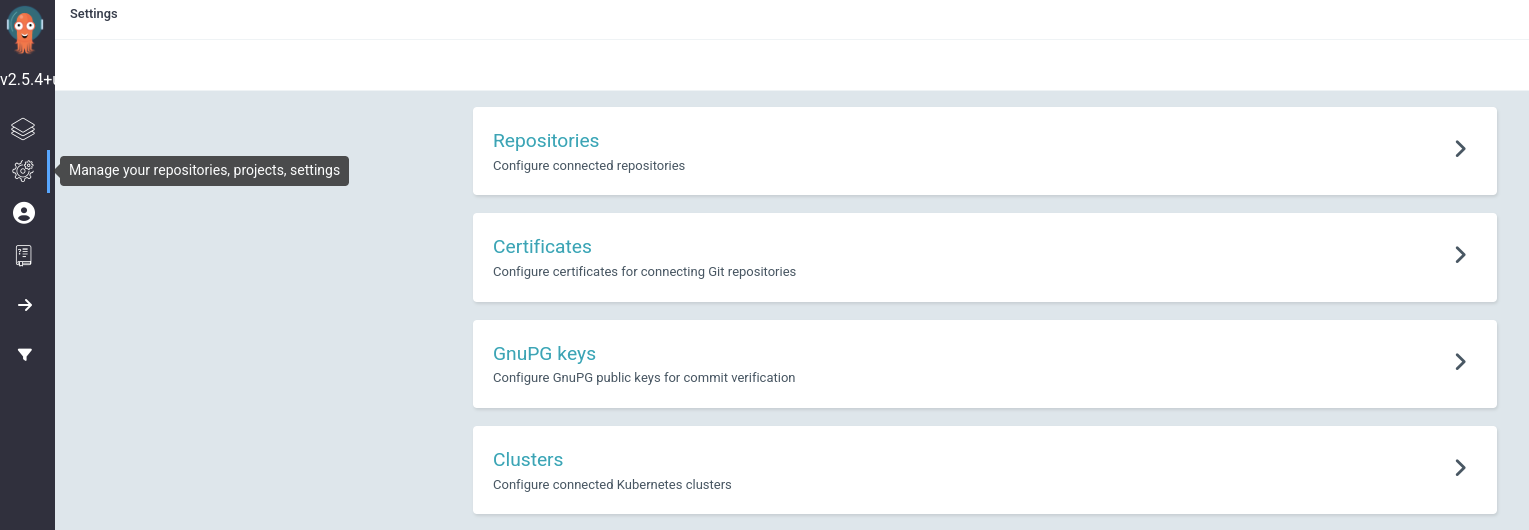

Access to ArgoCD Console:

ArgoCD Console is accessible from the Openshift Console

Login into the ArgoCD Console with the Openshift credentials.

Also, an Openshift Route is created:

oc --context acm get route -n openshift-gitopsIntegrate Openshift GitOps and Advanced Cluster Management

Now that the Advanced Cluster Management and OpenShift GitOps Operators are installed, we will integrate them. This integration lets us:

- Deploy and discover Argo CD multicluster applications
- Set up applications based on Git or Helm repositories
- Configure sync policies for our applications
- Configure placement based on labels or cluster sets

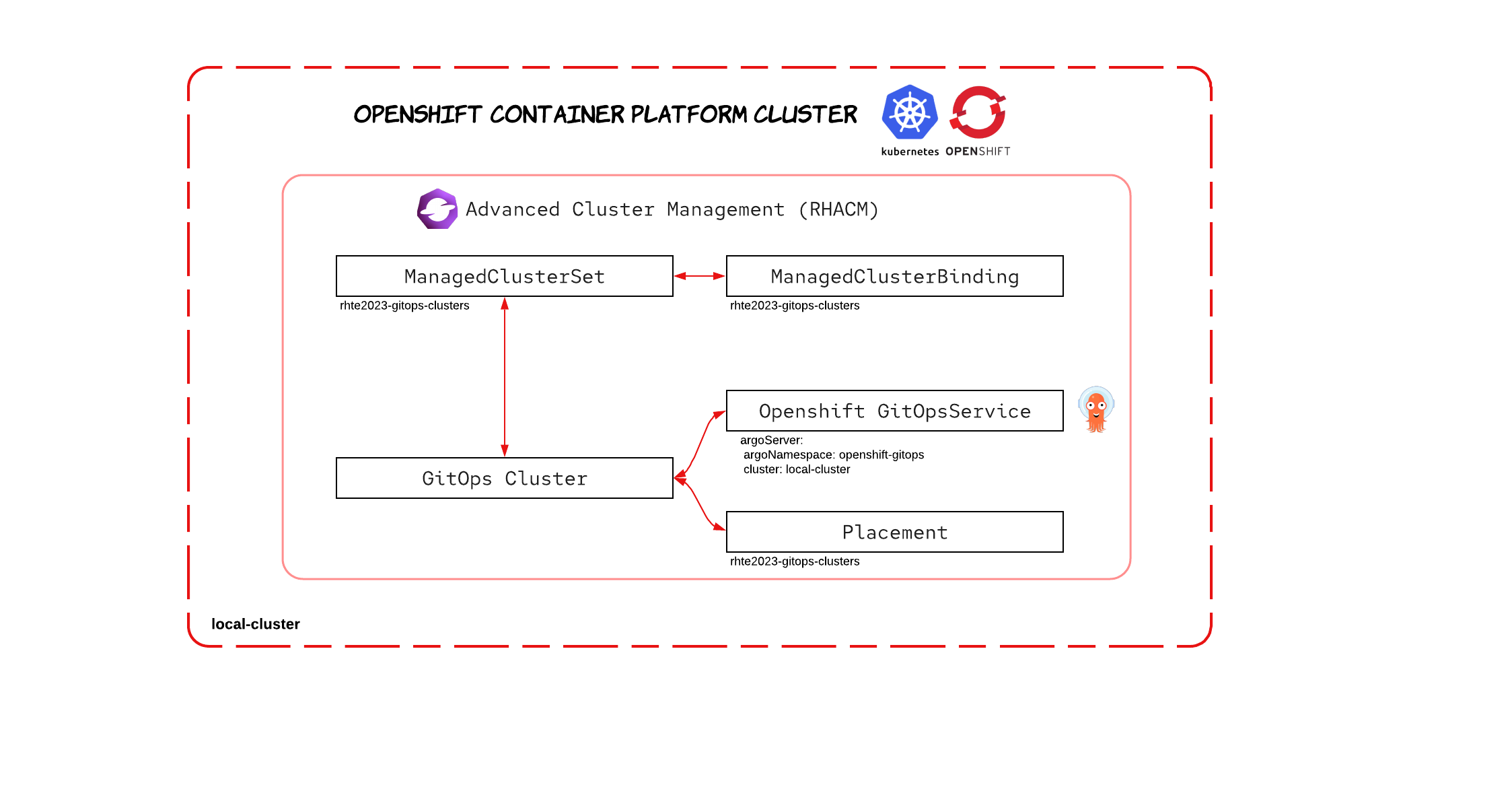

Advanced Cluster Management introduces a new GitOpsCluster resource kind, which connects to a Placement resource to determine which clusters to import into Argo CD. This integration allows you to expand your fleet while Argo CD automatically starts working with your new clusters. If you use Argo CD ApplicationSets, your application payloads are automatically applied to new clusters as Advanced Cluster Management registers them in your Argo CD instances.
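To make the relationship concrete, the helper below prints what such a GitOpsCluster manifest could look like. It is a sketch under stated assumptions, not the manifest shipped in the workshop repository: the resource name `rhte2023-gitops-cluster` and the function name are hypothetical, while the Placement name and namespace are the ones used in this guide.

```shell
# Print a GitOpsCluster manifest that imports the clusters selected by a
# Placement into the Argo CD instance of the given namespace.
# The metadata.name value is hypothetical; adjust it to your repository.
gitopscluster_manifest() {
  local placement="${1:-rhte2023-gitops-clusters-placement}"
  local namespace="${2:-openshift-gitops}"
  cat <<EOF
apiVersion: apps.open-cluster-management.io/v1beta1
kind: GitOpsCluster
metadata:
  name: rhte2023-gitops-cluster
  namespace: ${namespace}
spec:
  argoServer:
    cluster: local-cluster
    argoNamespace: ${namespace}
  placementRef:
    apiVersion: cluster.open-cluster-management.io/v1beta1
    kind: Placement
    name: ${placement}
EOF
}

# Usage (server-side dry run first, so nothing is changed):
#   gitopscluster_manifest | oc --context acm apply --dry-run=server -f -
```

The `placementRef` is the link described above: whatever clusters the Placement selects are the ones registered in Argo CD.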

Let’s run the following command to integrate the OpenShift GitOps Operator with Advanced Cluster Management:

    oc --context acm apply -k 02_bootstrap/base/gitops_acm/base/

It will create:

- a ManagedClusterSet, rhte2023-gitops-clusters. ManagedClusterSet resources allow the grouping of cluster resources. We will add both OpenShift clusters, local-cluster and rhte2023-cluster01, to this ManagedClusterSet, which enables deployment across all of the OpenShift clusters.
- a ManagedClusterSetBinding, rhte2023-gitops-clusters. The ManagedClusterSetBinding resource binds the ManagedClusterSet rhte2023-gitops-clusters to the namespace openshift-gitops. This means that applications and policies created in the openshift-gitops namespace can only access managed clusters that are included in the bound managed cluster set.
- a Placement, rhte2023-gitops-clusters-placement, based on the ManagedCluster label vendor=OpenShift and the cluster claim platform.open-cluster-management.io=AWS.

An example Placement:

    ---
    apiVersion: cluster.open-cluster-management.io/v1beta1
    kind: Placement
    metadata:
      name: rhte2023-gitops-clusters-placement
      namespace: openshift-gitops
    spec:
      predicates:
        - requiredClusterSelector:
            labelSelector:
              matchLabels:
                vendor: OpenShift
            claimSelector:
              matchExpressions:
                - key: platform.open-cluster-management.io
                  operator: In
                  values:
                    - AWS

We will see more Placement examples in the next sections.

| Placement Examples to select a cluster with the largest allocatable memory and/or CPU. |

| When you integrate ACM with the GitOps operator, a secret with a token to access the ManagedCluster is created in the GitOps namespace for every managed cluster that is bound to that namespace through the Placement and ManagedClusterSetBinding custom resources. This is required for the GitOps controller to sync resources to the managed cluster. When a user is given administrator access to a GitOps namespace to perform application lifecycle operations, the user also gains access to this secret and admin-level access to the managed cluster. |
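The secrets mentioned in the note can be inspected from the command line. The sketch below relies on the label that Argo CD uses to recognize cluster secrets, `argocd.argoproj.io/secret-type=cluster`, and on `oc adm policy who-can` to list who may read secrets in the namespace. The function names are hypothetical, and the defaults follow the context and namespace used in this guide.

```shell
# List the names of the Argo CD cluster secrets in the GitOps namespace.
list_argocd_cluster_secrets() {
  local ctx="${1:-acm}" ns="${2:-openshift-gitops}"
  oc --context "$ctx" get secret -n "$ns" \
    -l argocd.argoproj.io/secret-type=cluster \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}{end}'
}

# Show which users and groups are allowed to read secrets in that namespace.
who_can_read_secrets() {
  local ctx="${1:-acm}" ns="${2:-openshift-gitops}"
  oc --context "$ctx" adm policy who-can get secrets -n "$ns"
}

# Usage:
#   list_argocd_cluster_secrets acm openshift-gitops
#   who_can_read_secrets acm openshift-gitops
```

Reviewing the second listing before granting namespace administration is one way to keep track of who indirectly gains access to the managed clusters.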

Registering local-cluster to GitOps

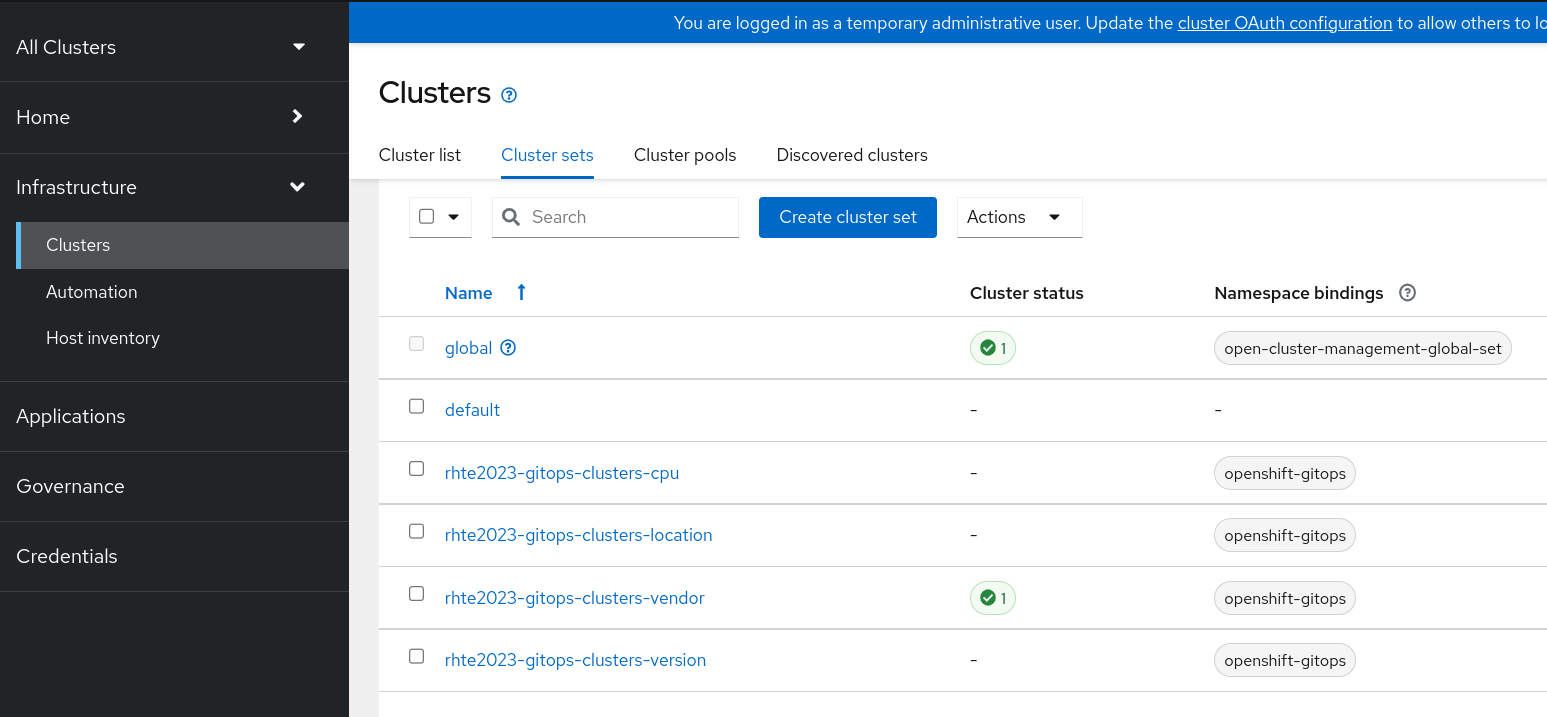

After the ACM/GitOps integration is done, let’s add local-cluster to ClusterSet rhte2023-gitops-clusters.

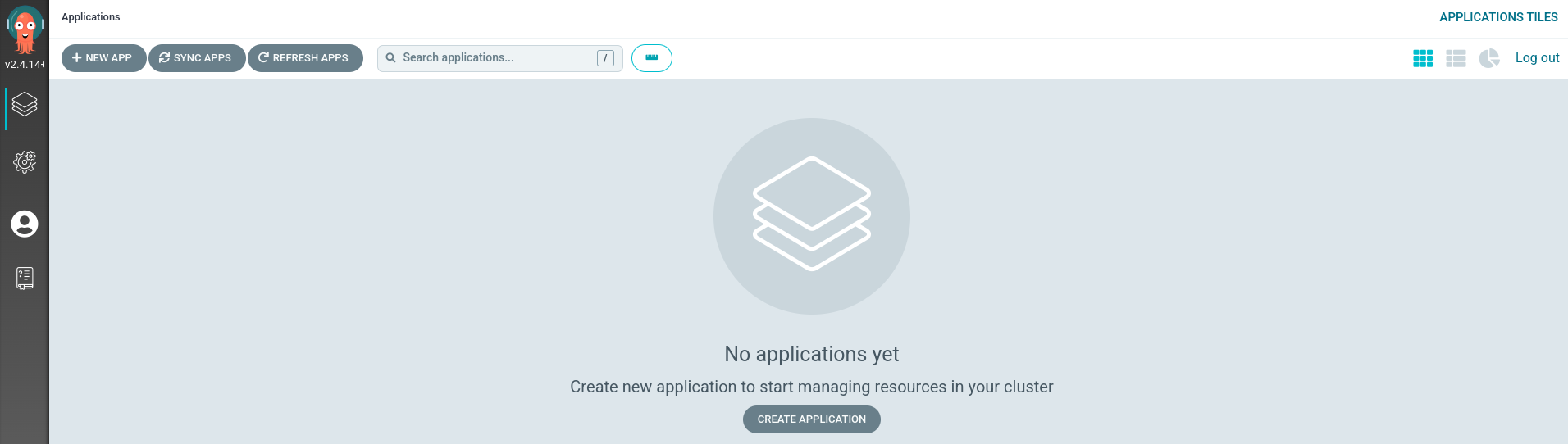

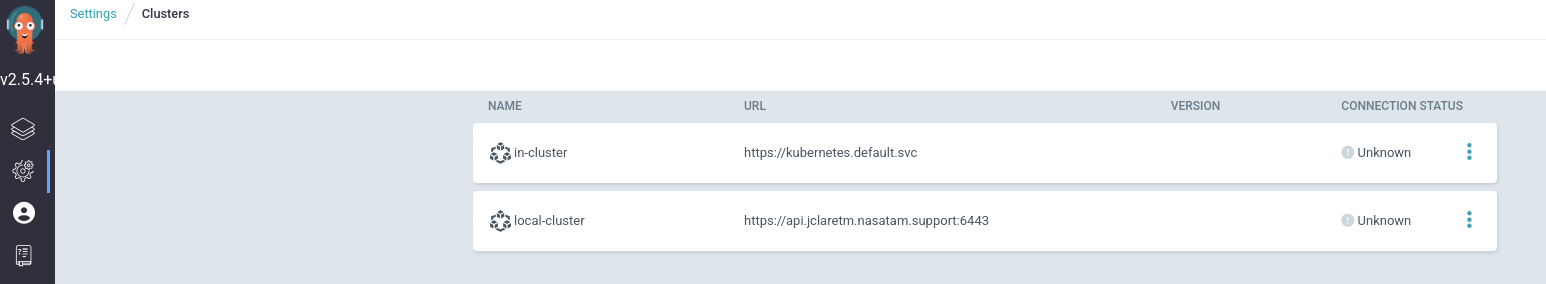

oc --context acm label ManagedCluster local-cluster cluster.open-cluster-management.io/clusterset=rhte2023-gitops-clusters --overwriteand check that cluster local-cluster is added as an ArgoCD Cluster from the ArgoCD Console > Settings > Clusters where we expect to see local-cluster as one of the clusters

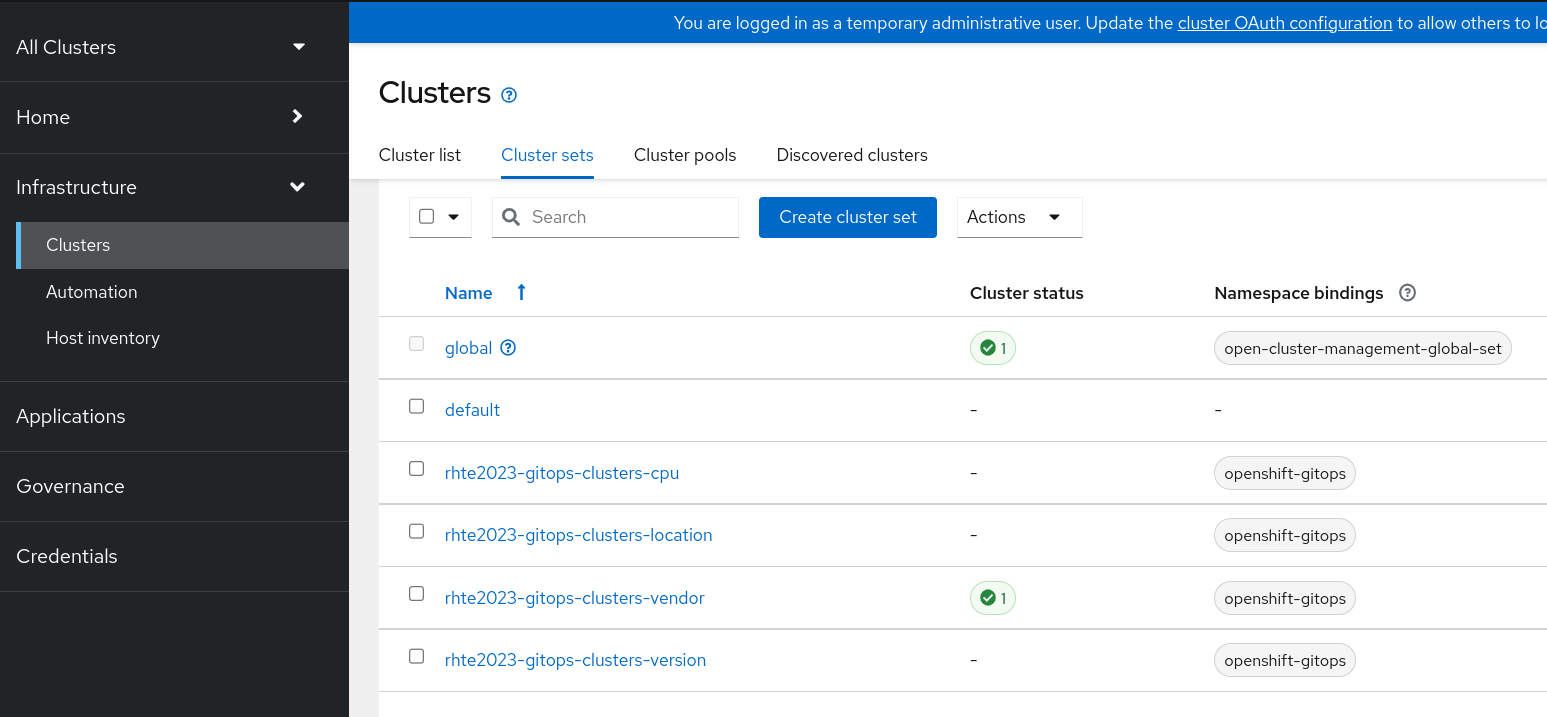

and the local-cluster being part of the ClusterSet rhte2023-gitops-clusters Cluster Set through Openshift Console > Advanced Cluster Management > Clusters > Cluster sets

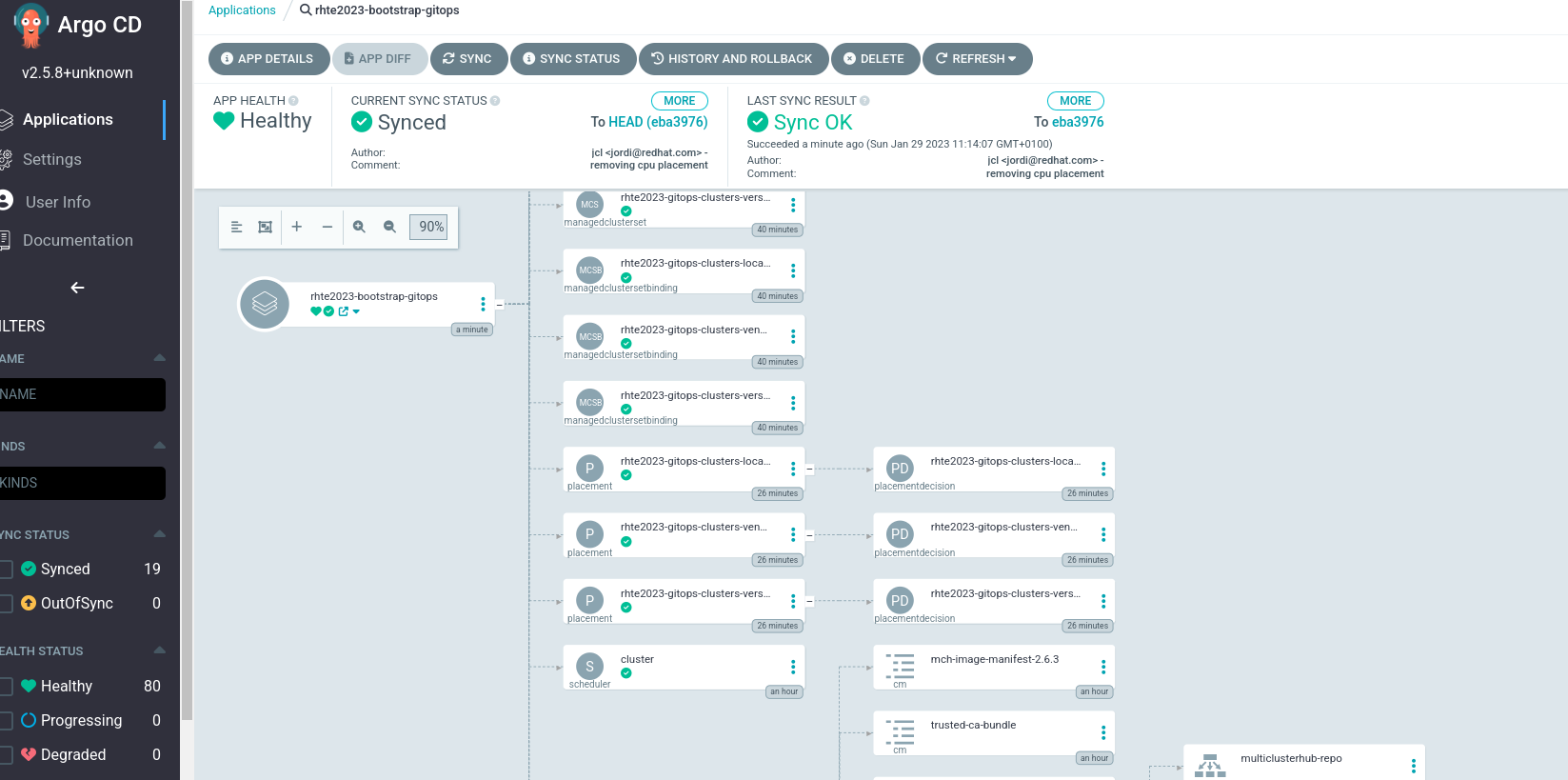
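The cluster set membership can also be checked from the command line by selecting managed clusters on the same label that was just applied. This is a minimal sketch; the function names are hypothetical, and the defaults are the context and cluster set name used in this guide.

```shell
# Print the names of the managed clusters that carry the cluster set label.
clusters_in_set() {
  local ctx="${1:-acm}" set_name="${2:-rhte2023-gitops-clusters}"
  oc --context "$ctx" get managedcluster \
    -l "cluster.open-cluster-management.io/clusterset=${set_name}" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}{end}'
}

# Succeed only when the named cluster is a member of the cluster set.
# First argument: cluster name; remaining arguments are passed to clusters_in_set.
cluster_in_set() {
  local cluster="$1"
  shift
  clusters_in_set "$@" | grep -qx "$cluster"
}

# Usage:
#   cluster_in_set local-cluster acm rhte2023-gitops-clusters && echo "registered"
```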

Argo CD — App Of Everything

This is the last step of this section, where we will use Argo CD to automate everything we have done so far. Let’s change the Application YAML file according to your settings.

- Edit the Application YAML file:

    vi 02_bootstrap/argocd/rhte2023-bootstrap-gitops.yaml

Change server to your cluster, https://api.<your_cluster>:6443, and repoURL to the Git repository you forked previously, https://github.com/<your_github_account>/rhte-2023-acm-apps.git:

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: rhte2023-bootstrap-gitops
      namespace: openshift-gitops
    spec:
      destination:
        namespace: openshift-gitops
        server: https://api.<your_cluster>:6443
      project: default
      source:
        path: rhte2023/02_bootstrap/base
        repoURL: https://github.com/<your_github_account>/rhte-2023-acm-apps.git

Finally, let’s create the Application:

    oc --context acm apply -f 02_bootstrap/argocd/rhte2023-bootstrap-gitops.yaml

Let’s check the results from the Advanced Cluster Management Console and the Argo CD Console:

ACM > Applications > Search > bootstrap

ArgoCD Console > Applications
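The same result can be checked without the consoles by reading the sync and health fields of the Application resource. The sketch below assumes the Application name and namespace from the YAML above; the function names are hypothetical.

```shell
# Print "<sync status> <health status>" for an Argo CD Application.
app_status() {
  local ctx="${1:-acm}" app="${2:-rhte2023-bootstrap-gitops}" ns="${3:-openshift-gitops}"
  oc --context "$ctx" get applications.argoproj.io "$app" -n "$ns" \
    -o jsonpath='{.status.sync.status}{" "}{.status.health.status}{"\n"}'
}

# Succeed only when the Application is both Synced and Healthy.
app_is_healthy() {
  [ "$(app_status "$@")" = "Synced Healthy" ]
}

# Usage:
#   app_status acm rhte2023-bootstrap-gitops openshift-gitops
#   app_is_healthy acm && echo "bootstrap application is in sync"
```

The fully qualified resource name `applications.argoproj.io` is used because Advanced Cluster Management defines its own Application kind in a different API group.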